We live in an era of measurement. Small, powerful devices strapped to our wrists and fingers have transformed our understanding of the human body, turning abstract concepts like "health" into a dashboard of legible data. We track our steps, our heartbeats, our sleep cycles. These wearables have given us a new language for discussing our physical selves, moving healthcare from episodic snapshots in a clinic to a continuous, flowing narrative.

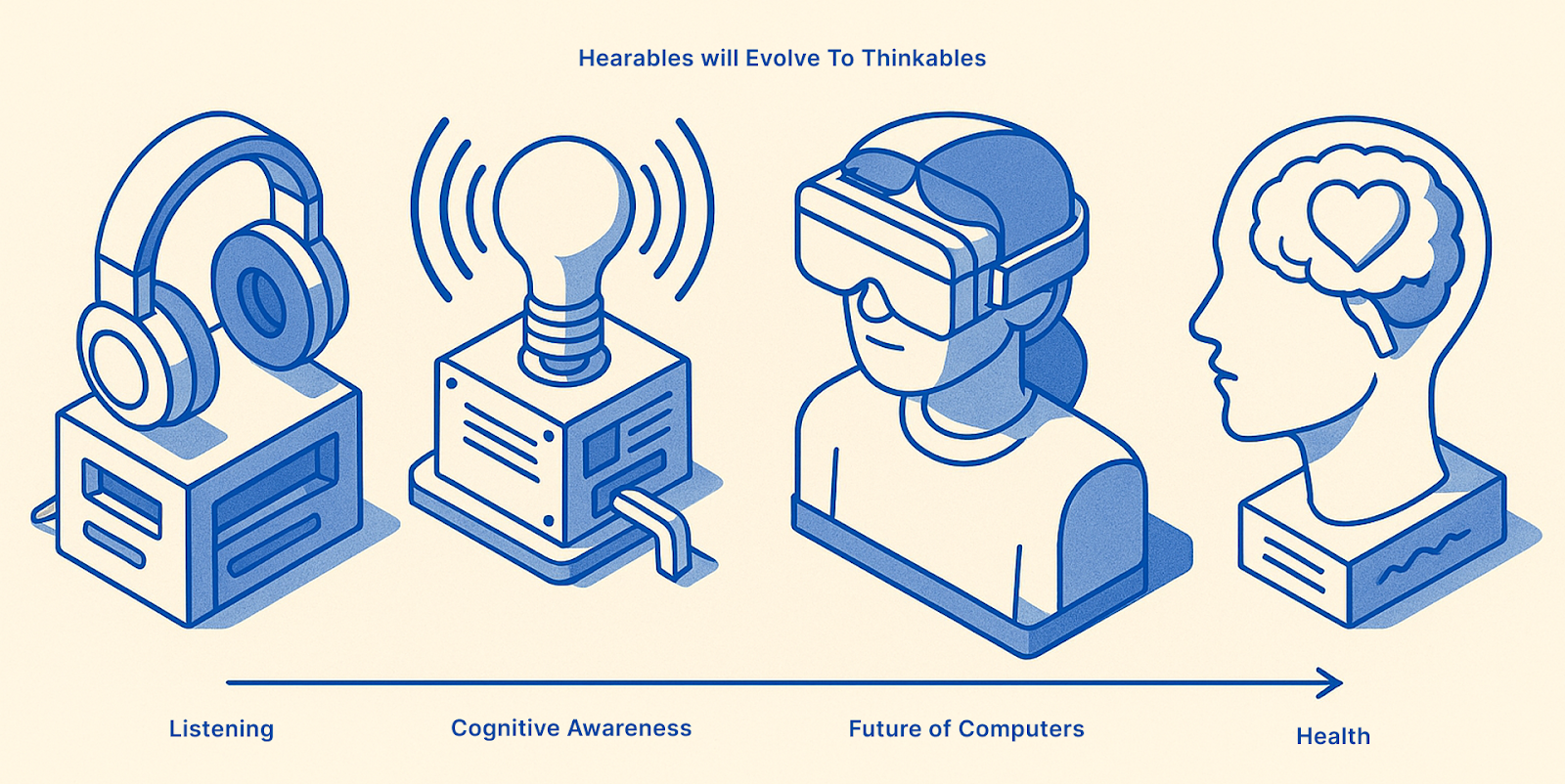

But for all their power, today’s wearables are fundamentally passive. They are historians, meticulously recording what our bodies have done. They tell us we slept poorly or that our heart rate spiked during a meeting. They are brilliant data collectors, but they are not yet true partners in navigating our world. They are wearables, not thinkables.

This distinction is the key to unlocking the next great leap in human-computer integration. The future requires an invisible interface, a technology that can understand our intent before it becomes a word or a gesture. This is the transition from wearables to thinkables: devices that don't just measure our bodies, but that listen to our minds.

The Technology Catching Up to the Vision

This isn't science fiction. This is the culmination of a technological evolution that has been quietly accelerating for decades. Brain-sensing technology, or electroencephalography (EEG), began in 1929 as a massive laboratory machine. It wasn't until the 1990s that the first portable EEG became commercially available, and the 2000s saw the first consumer headbands designed to help people meditate by giving them real-time feedback on their brain activity.

Now, we are in the age of integration. Driven by advances in miniaturization and AI, brain sensors are leaving the lab and disappearing into the devices we already wear every day. Headphones, earbuds, and smart glasses are becoming the first generation of thinkables, for two simple reasons: they are socially accepted, and their position on the head is the perfect real estate for listening to the brain.

At Neurable, we've spent over a decade engineering our way past the limitations of traditional EEG. We started with the hardest problems in neuroscience and built an AI platform that can extract clean, meaningful signals from just a few sensors. This is what makes a product like the MW75 Neuro possible.

The First True Thinkable: MW75 Neuro

The MW75 Neuro LT, developed in partnership with premium audio brand Master & Dynamic, is a concrete example of this transition. It isn't a strange new gadget you have to learn to wear; it's a pair of high-end, noise-canceling headphones. But embedded within the soft fabric of the ear pads are 12 EEG sensors that measure your brain activity as you work, listen, and live.

This isn't just data for data's sake. Our AI translates those signals into actionable insights you can see in the Neurable app.

- Proactive Health Monitoring: The MW75 Neuro LT tracks your focus levels in real time. It can see when you are in a deep state of flow and when your cognitive energy is starting to wane. Before you even feel the exhaustion, the app can suggest you take a "brain break," helping you manage your energy and prevent burnout. Over time, it helps you understand your own cognitive rhythms: when you do your best work, what environments help you focus, and how your habits impact your mental performance.

- Active Cognitive Training: Thinkables are not just passive monitors; they are active training tools. Our app includes the "Rocket Game," a simple but powerful biofeedback tool where you control the speed of a rocket with your mind. The more you focus, the faster it goes. For someone like me with ADHD, this is life-changing. It’s not just a game; it’s a way to learn what focus feels like, giving you the ability to consciously control your attention without medication.

The Next Frontier: Augmented Reality

The MW75 Neuro is the first step. The next logical platform for thinkables is augmented reality (AR). Today, the AR industry is facing the same challenge the personal computer faced before the mouse: it lacks a killer interaction method. Current AR glasses rely on hand gestures, voice commands, or small touchpads, all of which can be clunky, slow, and socially awkward.

A thinkable interface solves this, and companies are already making it a reality. AAVAA, for instance, is developing brain-computer interface (BCI) systems for smart glasses that allow users to control devices with a simple blink or eye movement. This is the beginning of a hands-free, voice-free, and truly intuitive way to interact with the digital world.

Imagine a future where your AR glasses are a thinkable device:

- You see a product in a store and think about it; the information appears seamlessly in your field of view.

- You're in a noisy environment and need to answer a call; you do it with a thought, without ever reaching for your phone.

- You're a surgeon or an engineer who needs to pull up schematics; you navigate menus with your mind, keeping your hands free for critical tasks.

This is where technology is heading: toward a world where our devices don't just respond to our commands but anticipate our needs and understand our intent.

The transition from wearables to thinkables is not just the next phase of technology. It is the next phase of the human experience. It is a future where our technology is more personal, more accessible, and more deeply human. It is a future where we defy our limitations.

This future belongs to all of us. It is our heritage as dreamers.

2 Distraction Stroop Tasks experiment: The Stroop effect (also known as cognitive interference) is a psychological phenomenon describing the difficulty people have naming the color of a word when the word itself spells the name of a different color. During each trial of this experiment, we flashed the words “Red” or “Yellow” on a screen. Participants were asked to respond to the color of each word and ignore its meaning by pressing one of four keys on the keyboard, “D,” “F,” “J,” or “K,” which were mapped to red, green, blue, and yellow, respectively. Trials were categorized as congruent, when the text content matched the text color (e.g., “Red” displayed in red), or incongruent, when the text content did not match the text color (e.g., “Red” displayed in yellow). Incongruent trials are counterintuitive and more difficult, so we expected to see lower accuracy, longer response times, and a drop in Alpha band power in those trials. To mimic the chaotic, distracting environment of in-person office life, we added another layer of complexity by floating the words over different visual backgrounds (a calm river, a roller coaster, a calm beach, and a busy marketplace). Both the behavioral and neural data we collected showed consistent differences in incongruent trials, such as longer reaction times and lower Alpha band power, particularly when the words appeared over the marketplace background, the most distracting scene.
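The trial logic described above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not Neurable's analysis pipeline: only the key-to-color mapping comes from the text, while the `Trial` record, the `summarize` function, and the sample trials are hypothetical. It labels each trial as congruent or incongruent, scores the key press against the display color, and reports accuracy and mean reaction time per condition.

```python
from dataclasses import dataclass
from statistics import mean

# Key-to-color mapping described in the experiment.
KEY_TO_COLOR = {"D": "red", "F": "green", "J": "blue", "K": "yellow"}


@dataclass
class Trial:
    # Hypothetical record format for one trial.
    word: str      # what the text says, e.g. "red"
    ink: str       # the color the text is displayed in
    key: str       # key the participant pressed
    rt_ms: float   # reaction time in milliseconds

    @property
    def congruent(self) -> bool:
        # Congruent when the word's meaning matches its display color.
        return self.word == self.ink

    @property
    def correct(self) -> bool:
        # Participants respond to the display color and ignore the word's meaning.
        return KEY_TO_COLOR.get(self.key) == self.ink


def summarize(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Accuracy and mean reaction time, split by trial condition."""
    summary = {}
    for label, is_congruent in (("congruent", True), ("incongruent", False)):
        subset = [t for t in trials if t.congruent == is_congruent]
        if not subset:
            continue
        summary[label] = {
            "accuracy": sum(t.correct for t in subset) / len(subset),
            "mean_rt_ms": mean(t.rt_ms for t in subset),
        }
    return summary


# Made-up trials for illustration only; these are not experimental data.
trials = [
    Trial("red", "red", "D", 520.0),
    Trial("yellow", "yellow", "K", 540.0),
    Trial("red", "yellow", "K", 690.0),
    Trial("yellow", "red", "K", 720.0),  # wrong key: responded to the word, not the color
]
print(summarize(trials))
```

In this framing, the expected Stroop effect shows up as lower accuracy and a higher mean reaction time in the incongruent row. Grouping by a background field in the same way would be one way to compare the visual scenes.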

Interruption by Notification: It’s widely known that push notifications decrease focus. In our three Interruption by Notification experiments, participants performed the Stroop tasks described above with and without push notifications, each of which consisted of a sound played at a random time followed by a prompt to complete an activity. Our behavioral analysis and focus metrics showed that, on average, participants had slower reaction times and lower accuracy during blocks with notifications than during blocks without them.
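The behavioral comparison in these experiments comes down to contrasting blocks with and without notifications. The following is a minimal sketch, assuming a hypothetical per-trial record of (block has notifications, response correct, reaction time); the function name and sample values are invented for illustration and are not the study's data.

```python
from statistics import mean

# Hypothetical per-trial records: (block has notifications?, correct?, reaction time in ms).
trials = [
    (False, True, 510.0),
    (False, True, 530.0),
    (False, False, 560.0),
    (True, True, 600.0),
    (True, False, 650.0),
    (True, False, 640.0),
]


def block_stats(records, with_notifications: bool) -> tuple[float, float]:
    """Return (accuracy, mean reaction time in ms) for one block type."""
    subset = [(ok, rt) for notif, ok, rt in records if notif == with_notifications]
    accuracy = sum(ok for ok, _ in subset) / len(subset)
    return accuracy, mean(rt for _, rt in subset)


quiet_acc, quiet_rt = block_stats(trials, with_notifications=False)
noisy_acc, noisy_rt = block_stats(trials, with_notifications=True)
print(f"without notifications: accuracy={quiet_acc:.2f}, mean RT={quiet_rt:.0f} ms")
print(f"with notifications:    accuracy={noisy_acc:.2f}, mean RT={noisy_rt:.0f} ms")
print(f"slowdown: {noisy_rt - quiet_rt:.0f} ms")
```

This sketch pools all trials for brevity. Because the text reports averages across participants, a fuller version would compute these statistics per participant before averaging them.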
